Foundation Model Engineering

Master the Design, Training, and Deployment of Large-Scale Foundation Models for AI Applications

Full Lifetime Access

Access on any Device

Technical Support

Secure Checkout

Course Completion Certificate

Price Match Guarantee

Full Lifetime Access

Access on any Device

Technical Support

Secure Checkout

Course Completion Certificate

97% Started a new career

BUY THIS COURSE (

97% Started a new career

BUY THIS COURSE (GBP 12 GBP 29 )-

86% Got a pay increase and promotion

86% Got a pay increase and promotion

Students also bought -

-

- AI Agents for Business Leaders

- 10 Hours

- GBP 12

- 10 Learners

-

- Edge AI: Building Intelligent Systems at the Edge

- 10 Hours

- GBP 12

- 10 Learners

-

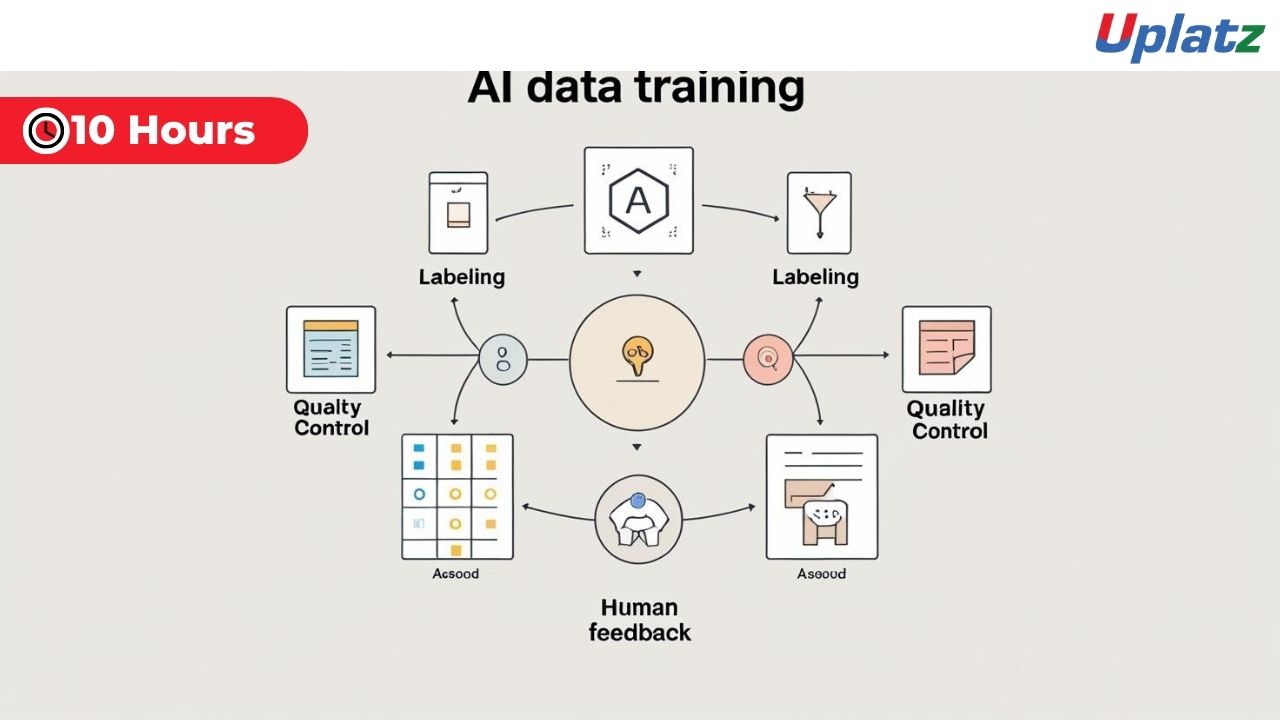

- AI Data Training: Labeling, Quality, and Human Feedback Engineering

- 10 Hours

- GBP 12

- 10 Learners

Foundation Model Engineering is at the heart of today’s most powerful AI breakthroughs. From conversational AI and search engines to image generation and multimodal assistants, foundation models such as GPT, LLaMA, PaLM, CLIP, Whisper, and Gemini now power a wide range of intelligent applications. These models are reshaping industries, redefining human–computer interaction, and enabling technologies previously considered impossible.

The Foundation Model Engineering course by Uplatz provides comprehensive training on the design, training, optimization, and deployment of large-scale AI systems. This course blends theoretical depth with hands-on practice, guiding learners through the core components of transformer-based architectures, large-scale pretraining, distributed training strategies, low-cost fine-tuning, model compression, inference optimization, and real-world deployment.

This course is ideal for machine learning engineers, data scientists, AI researchers, DevOps/MLOps specialists, and any professional seeking advanced expertise in building state-of-the-art AI systems.

🔍 What is Foundation Model Engineering?

Foundation Model Engineering refers to the techniques, architectures, and tools used to build extremely large AI models capable of generalizing across multiple domains. These models are trained on broad datasets (text, images, audio, video, or multimodal collections) and learn representations that can be adapted to many downstream tasks.

Key examples include:

- Large Language Models (LLMs): GPT, LLaMA, PaLM, Mistral
- Vision Transformers (ViTs): DINO, MAE, SAM
- Multimodal Models: CLIP, Gemini, Flamingo, LLaVA
- Speech Models: Whisper, SpeechT5
- Diffusion Models: Stable Diffusion, Imagen

What makes foundation models unique is their ability to transfer knowledge, enabling rapid fine-tuning for tasks like sentiment analysis, summarization, classification, retrieval, question answering, image captioning, and more.

Foundation Model Engineering covers:

- Transformer architecture and self-attention
- Tokenization & embedding techniques
- Pretraining objectives (causal, masked, contrastive)
- Efficient parallelization (tensor, pipeline, data, sequence parallelism)
- Optimizers (AdamW, Adafactor, Lion)
- Scaling laws & compute–data trade-offs
- Fine-tuning (LoRA, QLoRA, PEFT, adapters)
- Model distillation, pruning & quantization
- High-performance inference serving
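The first topic above, self-attention, can be illustrated in a few lines. Below is a minimal sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d_k)·V, in plain Python so it runs without PyTorch.

It rests on several assumptions:
- Q, K and V are already-projected lists of row vectors, one row per token.
- The learned projection matrices, multiple heads and batching of a real transformer layer are omitted.
- The function names are illustrative only.

The optional `causal` flag applies the GPT-style mask, which stops a token from attending to later positions.

```python
import math

# Illustrative sketch: function names are hypothetical, not a library API.


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability;
    # masked entries of -inf become exp(-inf) = 0.0.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V, causal=False):
    """Single-head attention, softmax(Q K^T / sqrt(d_k)) V.

    Q, K and V are lists of row vectors, one row per token."""
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        # Similarity of query i with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # GPT-style mask: position i may only attend to positions j <= i.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output row i is the attention-weighted average of the value rows.
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(X, X, X, causal=True)[0])  # [1.0, 0.0]
```

With the causal mask, the first token can only attend to itself, so its output is exactly its own value vector. Production code computes the same quantity with batched matrix multiplications on the GPU.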

This course provides the technical and practical roadmap for designing models that are scalable, efficient, and deployable in real-world environments.
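Tokenization, one of the topics listed above, is often handled with byte-pair encoding (BPE). The sketch below is a simplified, character-level version of BPE training. It repeatedly finds the most frequent adjacent symbol pair in a whitespace-split corpus and merges that pair into a new symbol.

Treat it as an illustration under stated assumptions, not the algorithm of any particular library. Production tokenizers typically operate on bytes, apply pre-tokenization rules and add special tokens. The function names are hypothetical.

```python
from collections import Counter

# Simplified character-level BPE for illustration; names are hypothetical.


def most_frequent_pair(words):
    """Return the most frequent adjacent symbol pair, or None if there is none.

    `words` maps a tuple of symbols (one tokenized word) to its corpus count."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(words, pair):
    """Rewrite every word, fusing each occurrence of `pair` into one symbol."""
    merged = Counter()
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] += freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    # Start from single characters: "low" -> ('l', 'o', 'w').
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:  # every word is already a single symbol
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


merges, vocab = learn_bpe("low lower lowest", 2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
```

On this toy corpus, "low" becomes a single vocabulary symbol after two merges. Ties between equally frequent pairs are broken by first appearance, which is an arbitrary choice made here for determinism.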

⚙️ How Foundation Model Engineering Works in Practice

This course demystifies the full lifecycle of foundation model development:

- Model Architecture Design
  - Understanding transformers, self-attention, and encoder–decoder structures
  - Position embeddings, multi-head attention, feed-forward networks
  - Scaling architectures (Mixture-of-Experts, Sparse Attention)
- Pretraining at Scale
  - Objective functions: causal LM, masked LM (MLM), contrastive learning
  - Data pipelines for terabyte-scale datasets
  - Tokenization & vocabulary construction
  - Handling long-context models
- Distributed Training
  - Data, tensor, pipeline, and expert parallelism
  - DeepSpeed, Megatron-LM, FSDP, ZeRO
  - Gradient checkpointing, mixed precision (FP16, BF16, FP8)
- Fine-Tuning & Adaptation
  - LoRA (Low-Rank Adaptation)
  - QLoRA: LoRA fine-tuning on top of a 4-bit quantized base model
  - Other PEFT methods: prefix tuning, prompt tuning
  - Instruction tuning & RLHF (Reinforcement Learning from Human Feedback)
- Model Evaluation & Alignment
  - Benchmarks: MMLU, BIG-bench, GLUE, ImageNet
  - Safety alignment & adversarial testing
  - Human-in-the-loop evaluation
- Inference & Deployment
  - ONNX Runtime, TensorRT, vLLM, DeepSpeed-Inference
  - Quantization (8-bit, 4-bit, 2-bit)
  - Optimizing throughput & latency
  - Scaling inference across GPUs or server clusters
- MLOps & Monitoring
  - Model versioning, drift detection
  - API deployment, load balancing
  - Continuous evaluation for bias, toxicity, and safety

This structured approach prepares learners to contribute to real foundation-model projects in AI labs and enterprise production systems.
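The distributed-training stage includes ZeRO, and a useful back-of-the-envelope check is how much memory the model states need per GPU. The sketch below follows the accounting used in the ZeRO paper for mixed-precision Adam, per parameter:
- 2 bytes for FP16 weights
- 2 bytes for FP16 gradients
- 12 bytes of FP32 optimizer state (master weights, momentum and variance)

Each ZeRO stage additionally partitions one of these across the data-parallel GPUs. The sketch ignores activations, temporary buffers and fragmentation, so treat its result as a lower bound. The function name is hypothetical and is not a DeepSpeed API.

```python
# Back-of-the-envelope estimate; the function name is hypothetical, not a DeepSpeed API.


def zero_model_state_bytes(num_params, num_gpus, stage=0, optimizer_bytes=12):
    """Approximate per-GPU memory (bytes) for model states under ZeRO stages 0-3.

    Assumes mixed-precision Adam as in the ZeRO paper: 2 bytes/param FP16 weights,
    2 bytes/param FP16 gradients, and `optimizer_bytes` (12) bytes/param of FP32
    master weights, momentum and variance. Activations and buffers are ignored."""
    weights = 2 * num_params
    grads = 2 * num_params
    optim = optimizer_bytes * num_params
    if stage >= 1:
        optim /= num_gpus    # stage 1: shard optimizer states
    if stage >= 2:
        grads /= num_gpus    # stage 2: also shard gradients
    if stage >= 3:
        weights /= num_gpus  # stage 3: also shard the parameters themselves
    return weights + grads + optim


for stage in range(4):
    gb = zero_model_state_bytes(7.5e9, num_gpus=64, stage=stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For a 7.5-billion-parameter model on 64 GPUs, the estimates are:

| ZeRO stage | Model-state memory per GPU |
| --- | --- |
| 0 (no partitioning) | about 120 GB |
| 1 | about 31.4 GB |
| 2 | about 16.6 GB |
| 3 | under 2 GB |

This shows why sharding all model states lets much larger models fit on the same hardware.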

🏭 Industry Applications of Foundation Models

Foundation models have become the backbone of innovation across multiple industries:

- Healthcare: Medical summarization, radiology image analysis, clinical documentation
- Finance: Fraud detection, investment insights, automated advisors
- E-commerce: Personalized recommendations, product search, chatbot automation
- Media & Marketing: Content generation, sentiment analysis, asset tagging
- Autonomous Systems: Vision-based navigation, multimodal perceptual understanding
- Education: Intelligent tutoring systems, personalized learning pathways
- Law & Compliance: Document summarization, contract review
- Customer Service: Virtual agents, support automation, conversational AI

Organizations like Google DeepMind, Meta AI, OpenAI, Apple, NVIDIA, Hugging Face, Stability AI, Microsoft, Tesla, and Anthropic are actively investing in foundation model research — making this one of the most sought-after skills in the AI workforce.

🌟 Benefits of Learning Foundation Model Engineering

Mastering foundation model engineering offers unmatched advantages:

- High-Demand Expertise: Few professionals have deep knowledge of LLMs, transformers, and large-scale AI, making this a premium skill.
- Cutting-Edge Skillset: Learn how GPT-level systems are built, optimized, and deployed.
- Cross-Domain Capability: Use foundation models for NLP, CV, speech, and multimodal tasks.
- Hands-On Experience: Gain practical proficiency in PyTorch, Hugging Face, and distributed training frameworks.
- Career Growth: Opens roles in ML engineering, AI research, MLOps, LLMOps, and applied AI.
- Real-World Impact: Build scalable models for enterprise-level automation, analysis, and innovation.
- Optimization & Cost Savings: Learn techniques to fine-tune and deploy models efficiently even with limited compute.

Foundation model expertise is one of the most future-proof technology skills for the next decade.

📘 What You’ll Learn in This Course

This course covers the entire lifecycle of foundation model development:

- Transformer architecture & attention mechanisms
- Tokenization, embeddings, and pretraining datasets
- Model scaling laws and compute–data trade-offs
- Distributed training (DeepSpeed, FSDP, ZeRO, Megatron)
- GPT-style causal language modeling
- Vision Transformers & multimodal encoders
- Fine-tuning (LoRA, PEFT, QLoRA, adapters)
- Building models using PyTorch & Hugging Face Transformers
- Evaluating and benchmarking foundation models
- Deployment using ONNX Runtime, vLLM, TensorRT
- Quantization and model compression
- Serving foundation models as APIs
- Safety evaluation, alignment & governance considerations
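GPT-style causal language modeling, listed above, trains the model to predict each next token. Below is a minimal sketch of the resulting loss:
- The score vector (logits) produced at position t is compared with the token at position t + 1.
- The negative log-probabilities are then averaged.

The sketch assumes the logits arrive as plain Python lists. In practice a framework produces them as a tensor and applies its built-in cross-entropy. The function names are illustrative.

```python
import math

# Illustrative sketch of the next-token objective; names are hypothetical.


def log_softmax(logits):
    # Log-sum-exp with the max subtracted, for numerical stability.
    m = max(logits)
    log_total = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_total for x in logits]


def causal_lm_loss(logits, token_ids):
    """Mean next-token cross-entropy (in nats).

    logits[t] holds the model's unnormalised scores over the vocabulary at
    position t and is scored against token_ids[t + 1]; the last position has
    no target, so it is skipped."""
    losses = [-log_softmax(logits[t])[token_ids[t + 1]]
              for t in range(len(token_ids) - 1)]
    return sum(losses) / len(losses)


uniform = [[0.0] * 4 for _ in range(3)]  # 3 positions, 4-token vocabulary
loss = causal_lm_loss(uniform, [0, 1, 2])
print(round(loss, 3), round(math.exp(loss), 3))  # 1.386 4.0
```

With uniform logits over a 4-token vocabulary, the loss equals ln 4 ≈ 1.386, meaning the model does no better than guessing. Exponentiating the mean loss gives the perplexity, which is 4 here.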

By the end, you will be able to architect, train, and deploy foundation models confidently.

🧠 How to Use This Course Effectively

To get the best learning experience:

- Start with Transformer Basics – Understand self-attention deeply.
- Follow Hands-On Labs – Experiment with small and mid-size models.
- Build Training Pipelines – Try distributed training with sample code.
- Practice Fine-Tuning – Apply LoRA, QLoRA, and PEFT on real datasets.
- Analyze Scaling Laws – Understand compute budgeting & model growth.
- Optimize Inference – Use quantization and efficient runtime techniques.
- Deploy Your Model – Expose your fine-tuned model as an API.
- Complete the Capstone Project – Build a production-ready foundation model pipeline.
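The "Analyze Scaling Laws" step usually starts from two rules of thumb:
- Training compute is roughly C ≈ 6·N·D FLOPs for a model with N parameters trained on D tokens.
- The Chinchilla analysis found that compute-optimal training uses on the order of 20 tokens per parameter.

The sketch below combines them to split a FLOP budget into a model size and a dataset size. Both constants are approximations rather than exact laws, and the function names are hypothetical.

```python
import math

# Rule-of-thumb compute budgeting; names are hypothetical, constants approximate.


def training_flops(num_params, num_tokens):
    # Rule of thumb: ~6 FLOPs per parameter per training token
    # (about 2 for the forward pass and 4 for the backward pass).
    return 6 * num_params * num_tokens


def compute_optimal_split(flops_budget, tokens_per_param=20):
    """Split a FLOP budget into (parameters, tokens).

    Solves C = 6 * N * D with D = tokens_per_param * N, i.e.
    N = sqrt(C / (6 * tokens_per_param)). The default of 20 tokens per
    parameter is the commonly quoted Chinchilla heuristic."""
    n_params = math.sqrt(flops_budget / (6 * tokens_per_param))
    return n_params, tokens_per_param * n_params


budget = training_flops(70e9, 1.4e12)  # ~5.9e23 FLOPs
n, d = compute_optimal_split(budget)
print(f"{n / 1e9:.0f}B parameters, {d / 1e12:.1f}T tokens")  # 70B parameters, 1.4T tokens
```

As a check, take the budget of a 70B-parameter model trained on 1.4T tokens. Feeding it back in recovers N ≈ 70B and D ≈ 1.4T, which matches the Chinchilla configuration. Real scaling-law fits also model the loss itself, which this estimate does not attempt.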

👩‍💻 Who Should Take This Course

This course is ideal for:

- Machine Learning Engineers
- AI Researchers
- Data Scientists
- MLOps / LLMOps Engineers
- AI Product Developers
- Computational Linguists
- Cloud/DevOps Engineers working with AI workloads
- Anyone transitioning into advanced AI engineering

Intermediate Python and ML knowledge is recommended, but full beginners can follow with guidance.

🧩 Course Format & Certification

This self-paced course includes:

- HD video lectures
- Hands-on coding labs
- Distributed training exercises
- Multi-framework demos (PyTorch, Hugging Face)
- Quizzes & practical checkpoints
- Downloadable notebooks
- Lifetime access
- A Uplatz Course Completion Certificate

You will finish with a portfolio-ready foundation model deployment project.

🚀 Why This Course Stands Out

- Covers foundation models end-to-end (architecture → training → deployment)
- Includes real hands-on labs with modern frameworks
- Provides strong grounding in distributed and scalable training
- Teaches industry-leading fine-tuning and optimization techniques
- Includes safety, ethics, and alignment considerations
- Prepares learners for high-demand roles in LLM engineering and applied AI

This course bridges research-level depth with industry-ready implementation.

🌐 Final Takeaway

Foundation models are redefining artificial intelligence — powering the most advanced chatbots, search engines, vision systems, recommendation engines, and multimodal assistants. Understanding how these models are engineered is no longer optional for professionals in AI; it is becoming a core requirement.

The Foundation Model Engineering course by Uplatz provides the complete blueprint for designing, training, scaling, optimizing, and deploying large-scale AI models. With a blend of technical rigor and practical application, this course prepares you to build the next generation of intelligent systems.

Start learning today and become a creator — not just a user — of state-of-the-art AI models.

Course Objectives

- Understand the architecture and mechanics of transformer-based models.
- Learn the principles behind pretraining and fine-tuning large models.
- Explore scaling laws and efficiency techniques for large-scale AI.
- Use distributed training frameworks like PyTorch DDP and DeepSpeed.
- Implement fine-tuning methods such as LoRA and PEFT.
- Optimize inference using quantization and pruning.
- Evaluate model performance using benchmark datasets.
- Deploy foundation models as APIs or cloud microservices.
- Understand model alignment and safety mechanisms.
- Prepare for research or engineering roles in large-scale AI model development.
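For the LoRA objective above, here is a minimal sketch of the idea in plain Python:
- The pretrained weight matrix W stays frozen.
- The layer adds a scaled low-rank update (alpha / r)·B·A, and only A (r × d_in) and B (d_out × r) would be trained.
- B starts at zero, so before any training the adapted layer returns exactly the base layer's output.

The class name, the initialisation scale and the default r and alpha values are assumptions for illustration. Libraries such as Hugging Face PEFT implement the same idea on PyTorch modules.

```python
import random

# Hypothetical LoRA sketch for illustration; not the PEFT library's API.


def matvec(M, x):
    # Plain matrix-vector product; M is a list of rows.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """Linear layer with a low-rank adapter: y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is frozen. Only A (r x d_in) and B (d_out x r) would be
    trained. B starts at zero, so the initial output equals the base layer's."""

    def __init__(self, W, r=4, alpha=8, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pretrained weights
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        # Compute B (A x) rather than forming B A: cheap because r is small.
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]


def lora_param_count(d_in, d_out, r):
    # Trainable values in A and B, versus d_in * d_out for full fine-tuning.
    return r * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x before training
print(lora_param_count(4096, 4096, 8), 4096 * 4096)  # 65536 16777216
```

The savings come from the parameter count. A d_out × d_in matrix has d_out·d_in weights, while the adapter has r·(d_in + d_out). For a 4096 × 4096 projection with r = 8, that is 65,536 trainable values instead of 16,777,216.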

Course Syllabus

Module 1: Introduction to Foundation Models

Module 2: Transformer Architecture and Attention Mechanisms

Module 3: Pretraining Objectives and Data Preparation

Module 4: Scaling Laws, Compute, and Optimization Strategies

Module 5: Fine-Tuning Techniques – LoRA, PEFT, and RLHF

Module 6: Distributed Training and Parallelization

Module 7: Model Compression, Quantization, and Pruning

Module 8: Evaluation, Benchmarking, and Bias Analysis

Module 9: Deployment Strategies – APIs, Containers, and Cloud Inference

Module 10: Capstone Project – Fine-Tuning and Serving a Foundation Model

Certification

Upon successful completion, learners receive a Certificate of Completion from Uplatz, validating their expertise in Foundation Model Engineering. This Uplatz certification demonstrates proficiency in the architecture, training, and deployment of large-scale AI models used in modern generative and predictive systems.

The certification aligns with cutting-edge practices in LLM development, MLOps, and AI model optimization. It is ideal for AI engineers, data scientists, and researchers aiming to build or customize foundation models for text, image, or multimodal applications.

This certificate highlights your readiness to work on high-impact projects in AI product development, applied research, and enterprise-level deployment of large-scale models.

Career & Jobs

The global demand for professionals skilled in foundation model engineering is growing rapidly, as enterprises and research labs race to build and fine-tune proprietary models.

After completing this course from Uplatz, learners can pursue roles such as:

- AI Research Engineer
- LLM Developer / Model Fine-Tuning Specialist
- MLOps Engineer (LLM Deployment)
- AI Infrastructure Engineer
- Applied Scientist (Generative AI)

Professionals in this domain typically earn between $120,000 and $220,000 per year, depending on location and seniority, with higher salaries at leading AI labs and startups.

Career opportunities exist in organizations working on large-scale AI platforms, generative AI startups, enterprise AI integration, and academic research labs. This course equips you with the ability to engineer foundation models responsibly, efficiently, and at scale — the core skill set defining the future of AI innovation.

Frequently Asked Questions

- What is a Foundation Model?
  A large AI model trained on broad, diverse data that can generalize across many tasks.
- What architecture underlies most foundation models?
  The Transformer architecture, built from stacked layers of self-attention and feed-forward networks.
- What are scaling laws in AI?
  Empirical relationships describing how model performance scales with data, parameters, and compute.
- What is LoRA fine-tuning?
  Low-Rank Adaptation: a parameter-efficient fine-tuning method that freezes the pretrained weights and trains small low-rank update matrices instead.
- What is the role of pretraining in LLMs?
  To learn general linguistic or multimodal representations before fine-tuning for specific tasks.
- What is quantization in AI models?
  Reducing numerical precision (e.g., FP32 → INT8) to cut memory use and make inference faster and more efficient.
- How does RLHF improve model performance?
  Reinforcement Learning from Human Feedback aligns model outputs with human preferences.
- What tools are used to train foundation models?
  PyTorch, TensorFlow, DeepSpeed, Megatron-LM, and Hugging Face Transformers.
- What is the main challenge of training foundation models?
  Managing computational cost, data quality, and ethical considerations at scale.
- How are foundation models deployed efficiently?
  Using techniques such as quantization, sharding, caching, and serving through scalable inference APIs.
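To illustrate the quantization answer above, here is a minimal sketch of symmetric per-tensor INT8 quantization:
1. Divide each value by a scale of max|x| / 127.
2. Round the result and clip it to [-127, 127].
3. To dequantize, multiply back by the scale.

The round-trip error is therefore at most half a quantization step. This is one simple scheme chosen for illustration. Production toolkits also use per-channel scales, zero points (asymmetric quantization), calibration data and 4-bit formats. The function names are hypothetical.

```python
# Simple symmetric INT8 scheme for illustration; names are hypothetical.


def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization.

    scale = max|x| / 127, q = clip(round(x / scale), -127, 127)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    # Map the 8-bit integers back to approximate real values.
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)  # [50, -127, 0, 127]
```

Storing one byte per value instead of four (FP32) cuts weight memory by about 4×. The cost is a small, bounded rounding error per value.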