LangChain and Vector Databases

Harness LangChain and Vector Databases to build intelligent, memory-aware, and retrieval-augmented AI applications with ease.

Price Match Guarantee Full Lifetime Access Access on any Device Technical Support Secure Checkout Course Completion Certificate 91% Started a new career

BUY THIS COURSE (

91% Started a new career

BUY THIS COURSE (GBP 12 GBP 29 )-

83% Got a pay increase and promotion

83% Got a pay increase and promotion

Students also bought -

-

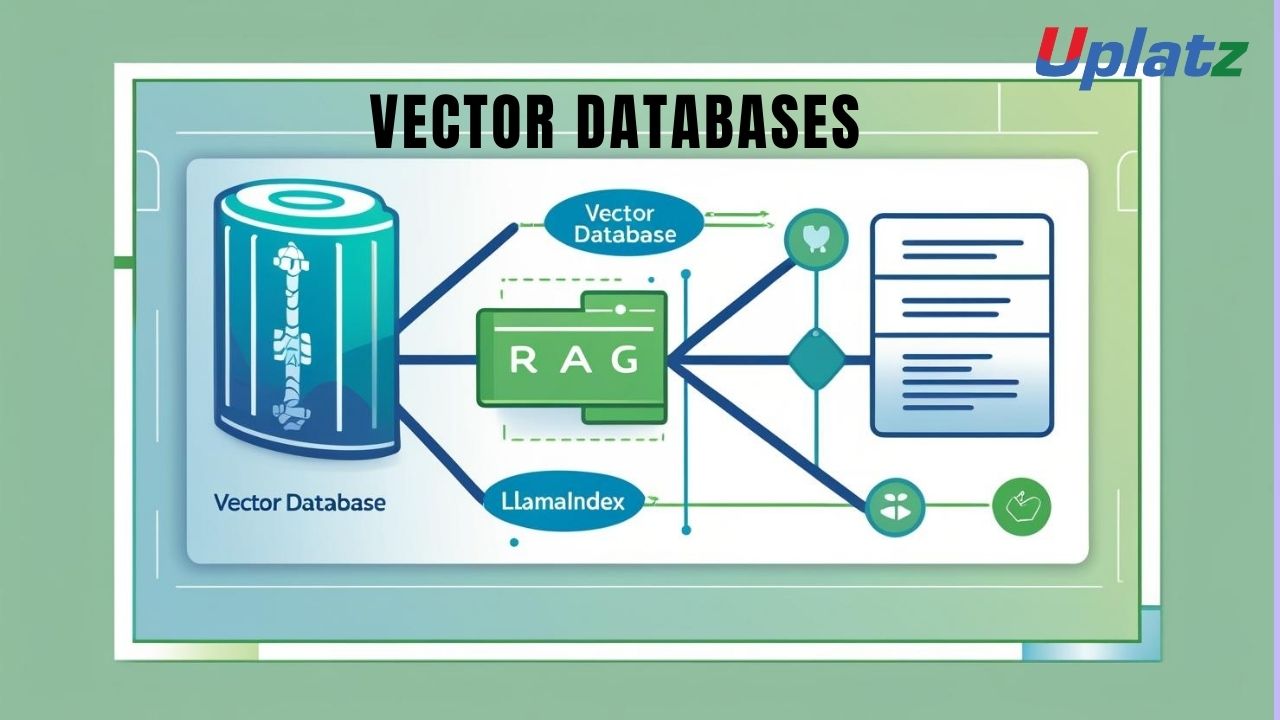

- Vector Databases & RAG (Retrieval-Augmented Generation) with LlamaIndex

- 10 Hours

- GBP 29

- 10 Learners

-

- MongoDB

- 15 Hours

- GBP 12

- 259 Learners

-

- SQL Programming with Microsoft SQL Server

- 55 Hours

- GBP 29

- 5739 Learners

The next generation of artificial intelligence (AI) applications is founded not only on powerful language models but also on the frameworks and databases that empower these models with memory, context, and reasoning. This self-paced course on LangChain and Vector Databases has been designed for developers, data engineers, AI enthusiasts, and solution architects who aim to master the essential tools that bridge large language models (LLMs) with dynamic, queryable, and context-rich data environments. As AI systems evolve from simple text generators to intelligent agents capable of understanding and interacting with vast knowledge repositories, the synergy between LangChain and vector databases becomes crucial in creating scalable and intelligent solutions.

LangChain serves as a powerful orchestration framework that allows seamless integration of LLMs such as OpenAI’s GPT, Anthropic’s Claude, or Meta’s LLaMA with external tools, APIs, and memory systems. It enables developers to construct chainable workflows where LLMs can not only generate text but also reason, retrieve information, take actions, and store contextual knowledge. Vector databases, in contrast, are specialized data stores designed for managing high-dimensional embeddings that represent semantic meaning. They are optimized for similarity-based retrieval, allowing systems to search and respond based on meaning rather than keywords—an essential feature for retrieval-augmented generation (RAG) and other modern AI applications.

This course provides an in-depth, hands-on exploration of how these technologies combine to create intelligent, context-aware AI systems. Learners will gain a deep understanding of how to design, build, and deploy scalable AI pipelines capable of interacting with unstructured and semi-structured data sources. By integrating LLMs with vector databases and retrieval mechanisms, participants will learn to build systems that can retain memory, perform semantic search, and deliver highly contextual responses.

Foundations of LangChain and Vector Databases

To appreciate the power of LangChain and vector databases, it is essential to understand the unique roles they play in the AI ecosystem.

LangChain is a modular and extensible Python framework developed to simplify the process of building context-aware AI applications using LLMs. Its architecture is based on several key components:

- Chains: These define sequences of operations that allow the model to process input, retrieve data, and generate responses in a structured workflow.

- Agents: Intelligent intermediaries that can reason about which tools or actions to take based on a user’s request.

- Tools and APIs: External functions or data sources that extend an LLM’s capability beyond text generation, allowing real-world interactions.

- Memory and Retrieval: Mechanisms that enable the model to retain information across conversations and reference past interactions.
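To make the idea of a chain concrete, the sketch below composes two steps in plain Python. It does not use LangChain's actual API; `make_chain`, `build_prompt`, and `fake_llm` are hypothetical names chosen for illustration, and the model call is a stand-in that contacts no service.

```python
from typing import Callable, List

# A "step" takes a dict of state and returns an updated dict.
Step = Callable[[dict], dict]

def make_chain(steps: List[Step]) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(state: dict) -> dict:
        for step in steps:
            state = step(state)
        return state
    return run

def build_prompt(state: dict) -> dict:
    # Fill a simple prompt template with the user's question.
    return {**state, "prompt": f"Answer briefly: {state['question']}"}

def fake_llm(state: dict) -> dict:
    # Stand-in for a real model call (hypothetical; no API is contacted).
    return {**state, "answer": f"[model reply to: {state['prompt']}]"}

chain = make_chain([build_prompt, fake_llm])
result = chain({"question": "What is a vector database?"})
```

Each step only reads and extends a shared state dictionary, which is one simple way to let a retrieval or memory step be slotted in between prompt construction and generation.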

On the other hand, vector databases such as Pinecone, Chroma, and Weaviate, and similarity-search libraries such as FAISS, are engineered to handle high-dimensional vector embeddings, which represent textual or multimedia information in a mathematical space. By storing and indexing these embeddings, they make it possible to perform semantic search: retrieving content that is conceptually similar to a given query rather than relying on exact keyword matches. This allows AI systems to access the most relevant information quickly, supporting use cases such as document question-answering, chat memory retrieval, and personalized recommendations.
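As a rough illustration of similarity-based retrieval, the following sketch ranks a tiny in-memory index by cosine similarity. The three-dimensional vectors are hand-made values, not real embeddings, and the brute-force `search` function is an assumption for teaching purposes; production vector stores use approximate indexes rather than scanning every vector.

```python
import math
from typing import Dict, List, Tuple

def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)

def search(index: Dict[str, List[float]], query: List[float], k: int = 2) -> List[Tuple[str, float]]:
    # Score every stored vector against the query and keep the k best.
    scored = [(doc_id, cosine_similarity(vec, query)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# Hand-made 3-dimensional "embeddings" (illustrative values only).
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-reference": [0.0, 0.1, 0.9],
}
top = search(index, query=[0.8, 0.2, 0.0], k=2)
```

Here the query vector points in nearly the same direction as the `refund-policy` vector, so that entry ranks first even though no keywords are compared at all.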

Together, LangChain and vector databases create an ecosystem where LLMs can retrieve and reason over structured knowledge, allowing for precise, context-driven outputs. This combination forms the basis for applications like chatbots with persistent memory, intelligent document assistants, and enterprise-level knowledge management systems.

Course Approach and Learning Journey

This course adopts a progressive, application-driven structure, ensuring that learners develop both conceptual clarity and hands-on expertise. The learning experience begins with the fundamentals of embeddings, chains, and semantic search, helping participants understand how natural language data is represented in vector space and retrieved effectively.

The early modules focus on how LangChain fits into the LLM ecosystem, highlighting its role as a bridge between generative models and real-world data sources. Participants will explore the mechanisms of chaining, agent orchestration, and memory management. The course also introduces learners to the concept of retrieval-augmented generation (RAG)—a powerful method for enhancing LLM responses with up-to-date or domain-specific information retrieved from external databases.
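The retrieve-then-generate flow of RAG can be sketched without any external service. In the example below, `embed` is a toy word-count embedding and the final prompt would be passed to an LLM in a real system; both are simplifying assumptions, and all function names are hypothetical.

```python
import math
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    # Toy embedding: lower-cased word counts. A real system would call an embedding model.
    return Counter(text.lower().split())

def similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, documents: List[str], k: int = 1) -> List[str]:
    # Rank documents by similarity to the question and keep the top k.
    q = embed(question)
    return sorted(documents, key=lambda d: similarity(q, embed(d)), reverse=True)[:k]

def build_rag_prompt(question: str, documents: List[str], k: int = 1) -> str:
    # Place the retrieved context ahead of the question so the model can ground its answer.
    context = "\n".join(retrieve(question, documents, k))
    return f"Use only this context:\n{context}\n\nQuestion: {question}"

docs = [
    "Refunds are issued within 14 days of purchase.",
    "Standard shipping takes 3 to 5 business days.",
]
prompt = build_rag_prompt("How long does shipping take?", docs)
```

Only the shipping document shares a word with the question, so it alone is placed in the prompt; the refund document is left out of the model's context.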

Building on this foundation, the course transitions into hands-on project work. Learners will design and implement practical systems such as:

- Chatbots with memory, capable of maintaining context across conversations using vector-based retrieval.

- Document question-answering systems, where users can query large corpora and receive contextually relevant summaries.

- Knowledge graph integrations, enabling structured reasoning over interconnected data.

- Intelligent agents, which use LangChain to plan and execute multi-step operations involving multiple data sources.

These projects are designed to provide not just technical experience but also a deep understanding of design patterns, scalability challenges, and best practices in AI system engineering.

Tools and Techniques

Throughout the course, learners will work with widely used tools and libraries from the AI ecosystem. They will integrate LangChain with vector stores such as Pinecone, ChromaDB, Weaviate, and FAISS, alongside LLMs from OpenAI and Hugging Face. Each tool is introduced within a real-world context, helping participants understand its purpose and value in building production-grade AI systems.

The course covers important implementation strategies such as prompt engineering, document chunking, metadata filtering, and hybrid search. Learners will also gain experience in managing and optimising vector storage and retrieval efficiency, balancing accuracy with performance at scale. These concepts are essential for developers building retrieval-based applications or intelligent search tools for enterprises.
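Hybrid search is often described as a weighted blend of keyword and semantic scores. The sketch below assumes that formulation; the `alpha` weight, the semantic scores, and the function names are all hypothetical, and real systems typically use a stronger keyword scorer such as BM25.

```python
from typing import Dict, List

def keyword_score(query: str, text: str) -> float:
    # Fraction of query terms that appear verbatim in the text.
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(term in words for term in terms) / len(terms) if terms else 0.0

def hybrid_rank(query: str, docs: Dict[str, str], semantic: Dict[str, float], alpha: float = 0.5) -> List[str]:
    # alpha weights semantic similarity; (1 - alpha) weights keyword overlap.
    def score(doc_id: str) -> float:
        return alpha * semantic[doc_id] + (1 - alpha) * keyword_score(query, docs[doc_id])
    return sorted(docs, key=score, reverse=True)

docs = {
    "a": "reset your password from the account page",
    "b": "recover access when login credentials are forgotten",
}
# Hypothetical semantic scores, as a vector store might return them.
semantic_scores = {"a": 0.60, "b": 0.80}
ranking = hybrid_rank("reset password", docs, semantic_scores, alpha=0.5)
```

With `alpha=0.5` the exact keyword match lifts document `a` above `b`; setting `alpha=1.0` falls back to the purely semantic ordering, which is one way to tune the balance between accuracy and recall for a given corpus.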

As learners progress, they will also explore evaluation and testing methodologies for AI systems. This includes assessing the accuracy and relevance of retrieved information, monitoring latency, and evaluating overall system performance. By understanding these principles, learners will be equipped to build robust, reliable, and explainable AI applications that can operate in dynamic environments.
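One possible way to measure retrieval relevance and latency is shown below. Recall@k is a common metric for this purpose, though the course material does not name a specific one; `fake_retriever` and its results are hypothetical stand-ins for a real vector store query.

```python
import time
from typing import Callable, List, Set

def recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    # Share of the relevant documents that appear in the top k results.
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)

def timed(fn: Callable, *args):
    # Return the function's result together with its wall-clock duration in seconds.
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

def fake_retriever(query: str) -> List[str]:
    # Stand-in for a vector store query (hypothetical results).
    return ["doc3", "doc1", "doc7", "doc2"]

results, seconds = timed(fake_retriever, "pricing tiers")
score = recall_at_k(results, relevant={"doc1", "doc2"}, k=3)
```

In this example only one of the two relevant documents appears in the top three results, so recall at 3 is one half; widening to the top four would recover both.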

Capstone and Real-World Application

The course culminates in capstone projects that allow learners to integrate all learned concepts into full-stack intelligent systems. Participants will design, deploy, and serve retrieval-augmented AI applications that combine LLM reasoning, vector storage, and long-term memory. Examples include:

- A knowledge assistant that continuously learns from new data.

- A customer support copilot that draws insights from internal documentation.

- A data intelligence dashboard that queries and summarizes information from multiple sources using semantic search.

These projects simulate real-world production workflows, helping learners understand not only the technical architecture but also the deployment strategies, including API hosting, server scaling, and data security considerations. By completing these exercises, learners will have the confidence to apply LangChain and vector database concepts in enterprise-grade environments.

Why Learn LangChain and Vector Databases?

In today’s AI-driven landscape, organisations increasingly seek systems that can think contextually, remember information, and provide relevant answers across interactions. Static, one-time responses are no longer sufficient. Mastering LangChain and vector databases enables professionals to design systems that extend the intelligence of LLMs—allowing them to connect with real data, maintain memory, and generate results that are both coherent and contextually informed.

This course equips learners with the critical skills needed to bridge the gap between language and knowledge, creating AI systems that are dynamic, adaptive, and enterprise-ready. As industries continue to shift towards retrieval-augmented and memory-driven architectures, expertise in LangChain and vector databases stands as one of the most in-demand capabilities for AI professionals in 2025 and beyond.

Course/Topic 1 - Coming Soon

The videos for this course are currently being recorded and should be available in a few days. Please contact info@uplatz.com for the exact release date.

- Understand the architecture and use cases of LangChain.

- Work with vector databases like FAISS, Pinecone, and ChromaDB.

- Generate embeddings from text using OpenAI, HuggingFace, and Cohere.

- Implement semantic search and similarity matching.

- Build Retrieval-Augmented Generation (RAG) pipelines.

- Create chatbots with memory and document-based Q&A systems.

- Integrate tools and agents using LangChain agents and tools modules.

- Handle chunking, indexing, and metadata filtering in vector stores.

- Deploy LangChain apps with Streamlit, FastAPI, or Flask.

- Apply LangChain to production use cases like customer support, legal research, and educational assistants.

- LLM stack overview

- What is LangChain?

- Why vector databases?

- Word, sentence, and document embeddings

- Tools: OpenAI, Cohere, HuggingFace

- Converting text to vector

- Chains and memory

- Prompt templates

- Simple LLM chain and sequential chains

- FAISS, Chroma, Pinecone, Weaviate

- Indexing and similarity metrics

- Creating and querying vector stores

- Document ingestion and chunking

- Similarity search and filtering

- Retrieval-Augmented Generation with LangChain

- Uploading and chunking documents

- Embedding generation and indexing

- Contextual question answering pipeline

- Tool integration (Google Search, WolframAlpha, APIs)

- Creating and managing agents

- Agent decision making and tool use

- ConversationBufferMemory, ConversationSummaryMemory, VectorStoreRetrieverMemory

- Stateful chat systems

- Context persistence

- Combining keyword and semantic search

- Filtering results based on metadata tags

- Improving relevance in retrieval pipelines

- Serving with FastAPI, Flask, or Streamlit

- Caching and latency optimization

- Logging, monitoring, and rate limits

- APIs, CRMs, knowledge bases

- Connecting LangChain with Zapier, Notion, Slack, and more

- Private LLMs vs. hosted models

- Build a full-stack knowledge agent

- Document upload, RAG, chat interface, tool access

- Deploy to cloud (Render, Vercel, or AWS)

On successful completion of this course, learners will earn a Certificate of Completion from Uplatz that validates their practical expertise in LangChain and vector databases. This credential affirms your readiness to build, optimize, and deploy AI systems powered by memory, retrieval, and intelligent data access. As AI workflows move from stateless prompts to persistent, context-rich interactions, this certification equips you with the tools and insights to stay ahead. Whether you’re seeking a role in applied AI development, LLMOps, or building next-gen intelligent products, this certification will distinguish your profile. With real-world projects and integration experience, the certificate reflects both architectural understanding and implementation skills using cutting-edge open-source tools.

- AI Application Developer

- LLM Engineer

- Vector Search Engineer

- ML Solutions Architect

- Conversational AI Developer

- Semantic Search Specialist

- Full-Stack AI Engineer

- Data Engineer (AI-focused)

LangChain is a Python framework for building LLM applications that require memory, context, tools, and data integration. It abstracts LLM workflows and allows chaining and retrieval.

A vector database stores high-dimensional vectors and allows fast similarity search for use cases like semantic search and document retrieval.

RAG is a technique where LLMs retrieve relevant documents or context from a vector store before generating responses, improving accuracy and grounding.

Embeddings are vector representations of text; they allow the semantic similarity between queries and stored content to be compared using cosine similarity or the dot product.
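The difference between the two measures is easiest to see with small numbers. The sketch below uses made-up two-dimensional vectors and hypothetical helper names: the dot product rewards longer vectors, cosine similarity looks only at direction, and the two agree once vectors are normalized to unit length.

```python
import math
from typing import List

def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def norm(a: List[float]) -> float:
    return math.sqrt(dot(a, a))

def cosine(a: List[float], b: List[float]) -> float:
    return dot(a, b) / (norm(a) * norm(b))

def normalize(a: List[float]) -> List[float]:
    n = norm(a)
    return [x / n for x in a]

query = [3.0, 4.0]
long_doc = [6.0, 8.0]    # same direction as the query, twice the length
other_doc = [4.0, 3.0]   # different direction, same length as the query

dot_scores = (dot(query, long_doc), dot(query, other_doc))
cos_scores = (cosine(query, long_doc), cosine(query, other_doc))
# After normalization, the dot product equals the cosine similarity.
unit_dot = dot(normalize(query), normalize(other_doc))
```

This is why many pipelines normalize embeddings before indexing: the cheaper dot product can then be used while preserving the cosine ranking.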

LangChain integrates with vector stores including FAISS, Chroma, Pinecone, Weaviate, Qdrant, and others.

Memory components store past user inputs, summaries, or embeddings to create contextual and stateful interactions in AI applications.
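A minimal sketch of buffer-style memory follows, assuming a fixed window of recent turns. `WindowMemory` is a hypothetical class written for illustration and is not one of LangChain's memory classes.

```python
from collections import deque
from typing import Deque, Tuple

class WindowMemory:
    """Keeps only the most recent `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3):
        # A bounded deque silently discards the oldest turn when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_context(self) -> str:
        # Render the stored turns as text that can be prepended to the next prompt.
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)

memory = WindowMemory(max_turns=2)
memory.add("My name is Priya.", "Nice to meet you, Priya.")
memory.add("I use FAISS.", "Noted.")
memory.add("What is chunking?", "Splitting documents into smaller parts.")
context = memory.as_context()
```

Because the window holds two turns, the first exchange has already been dropped from `context`. Summaries or embedding-backed memory exist to recover that kind of older information without growing the prompt indefinitely.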

Agents use tools and reasoning loops to decide how to fulfill tasks, making them ideal for complex workflows with API calls and document search.
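One step of such a loop can be sketched as "choose a tool, run it, record the observation". In the example below the choice is made by a simple rule in `choose_tool`, which stands in for the LLM's reasoning; the tools and all names are hypothetical and do not reflect LangChain's agent API.

```python
from typing import Callable, Dict

def calculator(expression: str) -> str:
    # Supports only "a + b" style input to keep the sketch small and safe.
    left, right = expression.split("+")
    return str(float(left) + float(right))

def doc_search(query: str) -> str:
    # Stand-in for a vector store lookup.
    return f"[top document for '{query}']"

TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "doc_search": doc_search,
}

def choose_tool(task: str) -> str:
    # Stand-in for the LLM's reasoning step: a real agent would ask the model to pick.
    return "calculator" if "+" in task else "doc_search"

def run_agent(task: str) -> str:
    tool_name = choose_tool(task)
    observation = TOOLS[tool_name](task)
    return f"{tool_name} -> {observation}"

math_result = run_agent("2 + 3")
search_result = run_agent("refund policy")
```

A fuller agent would feed each observation back to the model and repeat until it decides the task is complete.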

Chunking splits large documents into smaller parts for better embedding generation and search performance in vector databases.
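A simple word-based chunker with overlap might look like the sketch below. The chunk size and overlap values are arbitrary assumptions, and `chunk_words` is a hypothetical helper; real pipelines often split on characters, tokens, or sentence boundaries instead.

```python
from typing import List

def chunk_words(text: str, chunk_size: int = 5, overlap: int = 2) -> List[str]:
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the final window already reached the end of the text
    return chunks

chunks = chunk_words("one two three four five six seven eight", chunk_size=5, overlap=2)
```

The overlap repeats the last two words of one chunk at the start of the next, so a sentence that straddles a boundary is still fully contained in at least one chunk.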

Metadata filtering restricts vector search results to those matching tags such as document type, source, or timestamp, improving relevance.
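The sketch below applies such a filter to a list of search results after retrieval. The record layout, field names, and scores are hypothetical; many vector stores can apply an equivalent filter during the search itself rather than afterwards.

```python
from typing import Any, Dict, List

# Hypothetical search results, each carrying a similarity score and metadata tags.
records: List[Dict[str, Any]] = [
    {"id": "r1", "score": 0.91, "metadata": {"type": "contract", "year": 2023}},
    {"id": "r2", "score": 0.88, "metadata": {"type": "email", "year": 2024}},
    {"id": "r3", "score": 0.75, "metadata": {"type": "contract", "year": 2024}},
]

def filter_by_metadata(results: List[Dict[str, Any]], required: Dict[str, Any]) -> List[Dict[str, Any]]:
    # Keep a result only if every required key matches its metadata exactly.
    return [
        r for r in results
        if all(r["metadata"].get(key) == value for key, value in required.items())
    ]

contracts_2024 = filter_by_metadata(records, {"type": "contract", "year": 2024})
```

Note that the highest-scoring record is excluded here because it is from the wrong year, which illustrates how metadata constraints can override raw similarity.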

LangChain applications can be deployed as APIs using FastAPI or Flask, or served through a Streamlit UI, and containerized for platforms like AWS, GCP, or Vercel.