Responsible AI & AI Governance

Learn to design, deploy, and manage AI systems responsibly through ethics, compliance, and governance frameworks.

Price Match Guarantee

Full Lifetime Access

Access on any Device

Technical Support

Secure Checkout

Course Completion Certificate

90% Started a new career

BUY THIS COURSE (GBP 12 GBP 29)

78% Got a pay increase and promotion

78% Got a pay increase and promotion

Students also bought -

-

- Responsible AI: Bias, Fairness, and Explainability in ML

- 10 Hours

- GBP 29

- 10 Learners

-

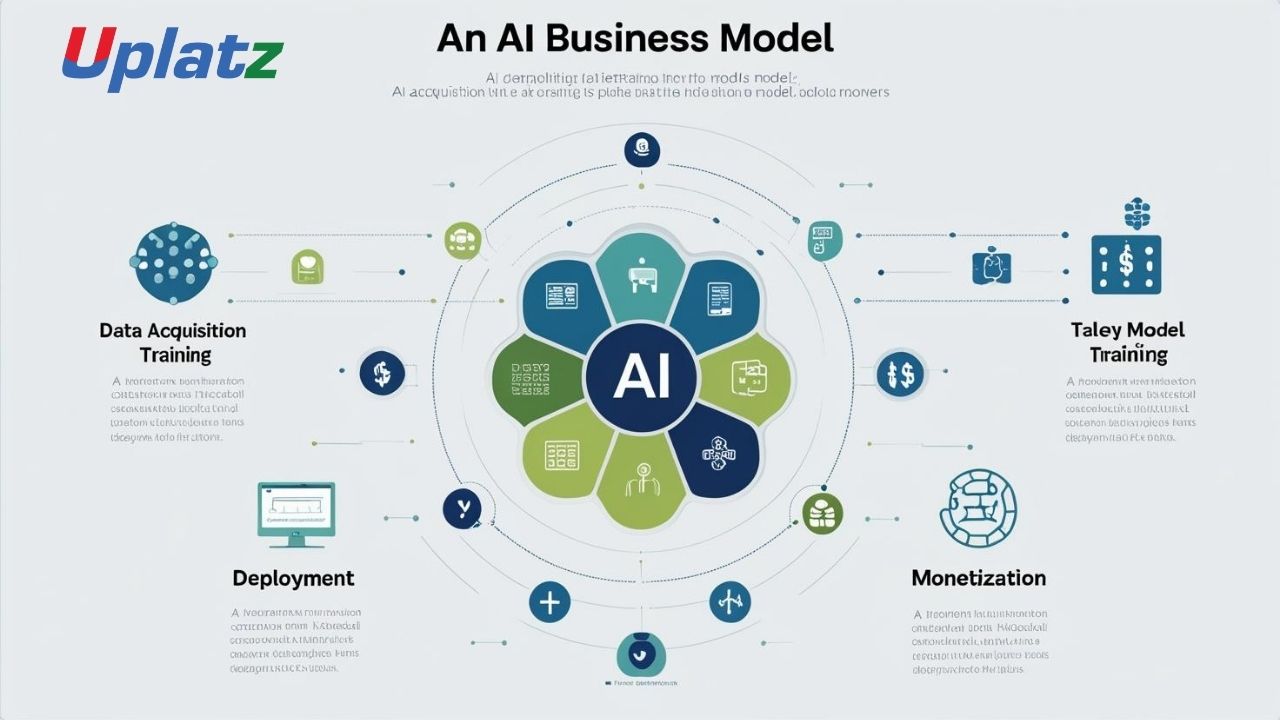

- AI Business Model Design

- 10 Hours

- GBP 29

- 10 Learners

-

- AI Cybersecurity

- 10 Hours

- GBP 29

- 10 Learners

Responsible AI & AI Governance – Build Trustworthy, Transparent, and Ethical AI Systems

Responsible AI & AI Governance is a comprehensive course designed to equip AI practitioners, policymakers, and business leaders with the knowledge and tools to ensure that artificial intelligence is developed and deployed responsibly. This course explores the ethical, legal, and societal dimensions of AI, focusing on building systems that are transparent, fair, accountable, and aligned with human values.

As AI becomes increasingly integrated into decision-making across industries, the need for governance frameworks that promote ethical conduct, fairness, and risk mitigation has never been more critical. This course bridges the gap between AI innovation and AI regulation, providing practical frameworks for complying with regulations such as the EU AI Act and the GDPR and for aligning with guidance such as the NIST AI Risk Management Framework and the OECD AI Principles.

Through a structured learning approach, learners will understand the principles of responsible AI—covering ethics, interpretability, bias mitigation, data privacy, accountability, and organizational governance. The course also explores how to build internal governance structures, establish audit mechanisms, and implement AI ethics at scale.

What You Will Gain

By the end of the course, you will have developed a deep understanding of how to design and manage AI systems responsibly, including:

- A Comprehensive AI Ethics Framework for your organization or project.

- A Governance and Compliance Blueprint aligning with regulatory standards.

- A Bias Detection and Mitigation Plan for data and models.

- A Transparency and Accountability Dashboard for monitoring AI performance.

You will learn how to:

- Identify ethical challenges and risks in AI development and deployment.

- Apply fairness, accountability, and transparency principles to AI systems.

- Build governance models aligned with international AI policy standards.

- Conduct AI audits and compliance assessments.

- Foster a culture of responsible AI within organizations.

Who This Course Is For

This course is ideal for:

- AI Practitioners & Data Scientists aiming to integrate responsible practices into model development.

- Compliance Officers & Policy Leaders establishing AI governance frameworks.

- Business Executives & Decision Makers managing ethical AI transformations.

- Researchers & Academics studying AI ethics, accountability, and trust.

- Students & Early Professionals interested in AI governance, policy, and regulation.

Whether you’re developing, deploying, or overseeing AI systems, this course provides the theoretical grounding and practical tools to ensure responsible and compliant AI practices.

Why Learn Responsible AI & AI Governance?

AI has the power to transform industries—but without proper governance, it can amplify bias, erode trust, and cause unintended harm. Responsible AI practices ensure that innovation remains human-centered, ethical, and transparent.

Learning Responsible AI & AI Governance helps you:

- Align AI innovation with ethical and legal standards.

- Prevent discrimination, bias, and unfair outcomes in automated decisions.

- Build trust through explainability and transparency.

- Develop governance mechanisms that ensure accountability and oversight.

- Position yourself at the forefront of AI policy, regulation, and leadership.

As AI regulations mature and accountability obligations, such as those introduced by the EU AI Act, become legally binding, professionals trained in Responsible AI are increasingly essential to organizational compliance and credibility.

By completing this course, learners will be able to:

- Understand key ethical principles and governance frameworks in AI.

- Apply fairness, transparency, and accountability standards in model design.

- Identify and mitigate algorithmic bias and discrimination.

- Establish risk management and compliance strategies.

- Implement AI audit processes and ethical impact assessments.

- Design governance models for ethical AI lifecycle management.

- Integrate Responsible AI principles into enterprise-level AI operations.

Course Syllabus

Module 1: Introduction to Responsible AI

Definition, scope, and importance of ethics and governance in AI systems.

Module 2: Core Principles of Responsible AI

Fairness, accountability, transparency, privacy, and human oversight.

Module 3: Global AI Ethics Frameworks

EU AI Act, OECD AI Principles, UNESCO Recommendation on the Ethics of Artificial Intelligence, and NIST AI RMF.

Module 4: AI Bias and Fairness

Types of bias, detection tools, mitigation strategies, and fairness metrics.
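To make "fairness metrics" concrete, here is a minimal Python sketch of two group-fairness measures often used in bias audits: the demographic parity difference (the gap between the highest and lowest rate of favorable predictions across groups) and the disparate impact ratio (the lowest rate divided by the highest). It assumes binary predictions where 1 is the favorable outcome and a single sensitive attribute; the function names and the example data are illustrative and not taken from any particular fairness toolkit.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Return the share of favorable (1) predictions for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        favorable[group] += int(pred == 1)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(y_pred, groups).values()
    return max(rates) - min(rates)


def disparate_impact_ratio(y_pred, groups):
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(y_pred, groups).values()
    highest = max(rates)
    if highest == 0:
        return 1.0  # no group receives favorable outcomes, so the rates are equal
    return min(rates) / highest


if __name__ == "__main__":
    # Hypothetical loan-approval predictions for applicants in groups "A" and "B".
    y_pred = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(y_pred, groups))
    print(demographic_parity_difference(y_pred, groups))
    print(round(disparate_impact_ratio(y_pred, groups), 3))
```

In this example group A is approved at a rate of 0.75 and group B at 0.25, giving a ratio of about 0.33. A ratio below 0.8 is commonly flagged under the "four-fifths rule" heuristic from US employee-selection guidelines. Which metric is appropriate depends on the use case, since different fairness definitions can conflict with one another.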

Module 5: Transparency and Explainability

Explainable AI (XAI) methods, model interpretability, and stakeholder communication.

Module 6: Accountability and Auditability

Internal governance, audit trails, documentation standards, and ethics review boards.

Module 7: Privacy and Data Governance

Data minimization, anonymization, consent management, and GDPR compliance.
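As a small illustration of data minimization, the Python sketch below keeps only the fields needed for a stated purpose and replaces the direct identifier with a keyed hash (HMAC-SHA256) from the standard library. The function name, field names, and key are hypothetical. Note that this is pseudonymization rather than anonymization: under the GDPR, pseudonymized data is still personal data because whoever holds the key can re-link it, so true anonymization needs further techniques such as aggregation or generalization.

```python
import hashlib
import hmac


def minimize_and_pseudonymize(record, allowed_fields, id_field, secret_key):
    """Keep only allowed fields and replace the direct identifier with an HMAC-SHA256 token."""
    reduced = {k: v for k, v in record.items() if k in allowed_fields}
    # Keyed hash: the same identifier and key always yield the same token,
    # but the token cannot be recomputed without the secret key.
    token = hmac.new(secret_key, str(record[id_field]).encode("utf-8"), hashlib.sha256)
    reduced["subject_token"] = token.hexdigest()
    return reduced


if __name__ == "__main__":
    # Hypothetical key; in practice load it from a secrets manager, never hard-code it.
    key = b"replace-with-a-secret-from-a-key-vault"
    raw = {"email": "jane@example.com", "name": "Jane Doe", "age": 34,
           "postcode": "SW1A 1AA", "loan_amount": 12000}
    print(minimize_and_pseudonymize(raw, {"age", "loan_amount"}, "email", key))
```

Using a keyed hash rather than a plain hash matters because common identifiers such as email addresses can be re-identified by hashing candidate values and comparing the results.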

Module 8: AI Risk Management

Risk identification, classification, and mitigation across AI lifecycle stages.

Module 9: Human-in-the-Loop (HITL) Systems

Ensuring human control, feedback loops, and oversight in AI decision-making.
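One common human-in-the-loop pattern is confidence-based routing: the system finalizes only high-confidence, low-impact predictions automatically and queues everything else for a human reviewer, whose final decision is recorded. The Python sketch below assumes a model that outputs a label with a confidence score in [0, 1]; the 0.9 threshold, the treatment of "reject" as a high-impact label that always gets reviewed, and all names are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    case_id: str
    model_label: str
    confidence: float
    needs_review: bool
    final_label: Optional[str] = None
    reviewer: Optional[str] = None


def route(case_id, model_label, confidence, threshold=0.9,
          always_review=frozenset({"reject"})):
    """Finalize only confident, low-impact predictions; queue the rest for a human."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    needs_review = confidence < threshold or model_label in always_review
    decision = Decision(case_id, model_label, confidence, needs_review)
    if not needs_review:
        decision.final_label = model_label  # automated outcome, no human involved
    return decision


def record_review(decision, reviewer, final_label):
    """Store the human reviewer's outcome, which may override the model's label."""
    if not decision.needs_review:
        raise ValueError("decision was not routed for human review")
    decision.reviewer = reviewer
    decision.final_label = final_label
    return decision


if __name__ == "__main__":
    queue = [route("c1", "approve", 0.97), route("c2", "approve", 0.62),
             route("c3", "reject", 0.99)]
    for d in queue:
        print(d.case_id, "human review" if d.needs_review else "automated")
    record_review(queue[2], reviewer="analyst-1", final_label="approve")
    print(queue[2].model_label, "->", queue[2].final_label)
```

Keeping the model's label alongside the reviewer's final label preserves a record of every override, which supports auditability and gives a feedback signal for retraining or recalibrating the threshold.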

Module 10: Regulatory Compliance and Standards

Understanding AI policy landscapes, ISO standards, and ethical certifications.

Module 11: Organizational AI Governance Models

Structures, committees, and roles for ethical and compliant AI operations.

Module 12: Trustworthy and Safe AI Systems

Designing AI systems for reliability, robustness, and user trust.

Module 13: AI Ethics by Design

Embedding ethical and fairness principles at each design and development stage.

Module 14: Social, Legal, and Economic Implications

AI’s impact on employment, human rights, and social equity.

Module 15: Governance for Generative and Autonomous AI

Addressing risks in large language models, generative AI, and autonomous systems.

Module 16: AI Audit and Impact Assessment

Creating internal AI audit frameworks and ethical impact assessment templates.

Module 17: Responsible AI in Practice

Case studies: Microsoft, Google, IBM, and regulatory applications.

Module 18: Building Responsible AI Culture

Organizational change management, training, and communication.

Module 19: Capstone Project – AI Governance Framework Design

Develop a governance framework for a fictional or real AI product.

Module 20: Responsible AI Interview Questions & Answers

Common interview questions for roles in Responsible AI, governance, and compliance.

Upon completion, learners will receive a Certificate of Excellence in Responsible AI & AI Governance from Uplatz.

This certificate verifies your understanding of ethical AI design, governance frameworks, and compliance strategies, positioning you as a trusted AI professional capable of leading responsible innovation initiatives.

Responsible AI professionals are in growing demand across government agencies, tech companies, consulting firms, and global enterprises. Completing this course opens career paths such as:

- Responsible AI Specialist

- AI Ethics & Governance Officer

- Compliance & Risk Manager (AI Systems)

- AI Policy Advisor

- Data Ethics Consultant

- Trust & Safety Engineer

With rising global regulations and organizational accountability requirements, expertise in Responsible AI and governance offers exceptional career security and global relevance.

- What is Responsible AI and why is it important?

Responsible AI is the practice of developing and deploying AI technologies ethically and safely, reducing the risk of bias, harm, and misuse.

- What are the core principles of Responsible AI?

Fairness, accountability, transparency, privacy, reliability, and human oversight.

- How does AI governance differ from AI ethics?

Ethics defines the moral principles AI should follow, while governance puts them into practice through operational frameworks, roles, processes, and compliance mechanisms.

- What is bias in AI and how can it be mitigated?

Bias occurs when data or models produce systematically unfair outcomes for certain individuals or groups. It is mitigated through representative datasets, bias-detection tools and fairness metrics, mitigation techniques applied before, during, or after model training, and ongoing monitoring.

- What is explainable AI (XAI)?

A set of techniques that make AI decision-making understandable to users and regulators.

- What frameworks support AI governance globally?

The EU AI Act, the OECD AI Principles, the NIST AI RMF, and ISO/IEC 42001:2023, the international standard for AI management systems.

- What is the role of human-in-the-loop systems?

They ensure that humans can review, intervene in, or override AI decisions to maintain accountability and safety.

- How does data privacy relate to Responsible AI?

Responsible AI requires compliance with data protection laws such as the GDPR and ethical data collection and processing.

- What are common challenges in implementing AI governance?

Lack of standardization, limited interpretability, organizational resistance, and regulatory complexity.

- Why are AI ethics and governance crucial for business sustainability?

They build trust, reduce reputational risk, support compliance, and enable long-term innovation.
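The interview answer on bias refers to mitigation. One well-known pre-processing technique is reweighing, described by Kamiran and Calders: each training example receives the weight P(group) × P(label) / P(group, label), so that group membership and outcome are statistically independent in the weighted data. Below is a minimal Python sketch, assuming a single sensitive attribute and discrete labels; the function names and data are hypothetical, and the weights would be passed to any learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for group, label in zip(groups, labels):
        # Count expected for this (group, label) pair if group and label were independent,
        # divided by the count actually observed.
        expected = group_counts[group] * label_counts[label] / n
        weights.append(expected / joint_counts[(group, label)])
    return weights


def weighted_positive_rate(groups, labels, weights, group, positive=1):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive_total = sum(w for g, y, w in zip(groups, labels, weights)
                         if g == group and y == positive)
    return positive_total / total


if __name__ == "__main__":
    # Hypothetical historical outcomes: group "A" was favored (3 of 4 positive).
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    weights = reweighing_weights(groups, labels)
    print([round(w, 3) for w in weights])
    for g in ("A", "B"):
        print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

Before weighting, the positive rate is 0.75 for group A and 0.25 for group B; after weighting, both are 0.5. Reweighing changes only how much each example counts, not the data itself, so fairness should still be re-measured on the trained model's predictions, because balanced training labels do not guarantee fair outputs.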