FAISS - Vector Similarity Search & Embedding Retrieval

Master FAISS for efficient vector similarity search and large-scale embedding-based retrieval to power AI, NLP, and recommendation systems.

Students also bought -

-

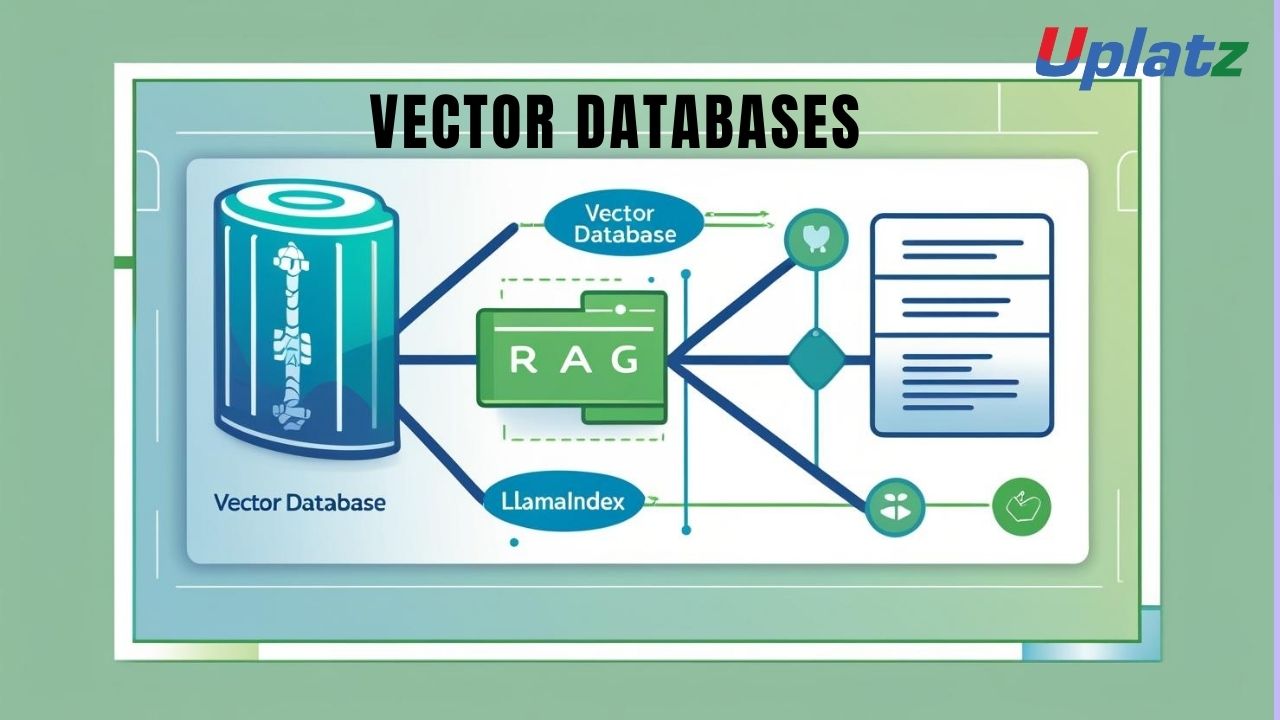

- Vector Databases & RAG (Retrieval-Augmented Generation) with LlamaIndex

- 10 Hours

- GBP 29

- 10 Learners

-

- Vector Search: Powering Semantic Retrieval and AI Applications

- 10 Hours

- GBP 29

- 10 Learners

-

- LangChain and Vector Databases

- 10 Hours

- GBP 12

- 10 Learners

- Start with Fundamentals: Understand embeddings, similarity metrics (cosine, Euclidean), and ANN search basics.

- Hands-On Practice: Work with FAISS in Python to create indexes, insert vectors, and query results.

- Experiment with Index Types: Learn the differences between Flat, IVF, PQ, and HNSW indexes.

- Leverage GPU Acceleration: Practice deploying FAISS with GPU for large-scale search.

- Integrate with AI Tools: Combine FAISS with LangChain, Hugging Face models, and OpenAI embeddings.

- Apply to RAG Pipelines: Use FAISS to store and retrieve knowledge base vectors for LLM applications.

- Optimize for Scale: Learn strategies for memory efficiency, sharding, and distributed FAISS.

- Work on Real Projects: Build semantic search engines, recommendation systems, and vector-based chatbots.

- Benchmark and Tune: Measure query speed and accuracy trade-offs in different FAISS configurations.

- Document and Reuse: Keep reusable code templates and deploy FAISS pipelines in production systems.

Course/Topic 1 - Coming Soon

The videos for this course are being recorded and should be available in a few days. Please contact info@uplatz.com for the exact release date.

- Understand vector similarity search and its role in modern AI workflows.

- Install, configure, and use FAISS for vector indexing and retrieval.

- Work with different FAISS index types (Flat, IVF, PQ, HNSW).

- Implement Approximate Nearest Neighbor (ANN) search for scalability.

- Integrate FAISS with embeddings from OpenAI, Hugging Face, and sentence transformers.

- Build semantic search engines and recommendation pipelines.

- Optimize FAISS for performance using GPU acceleration.

- Deploy FAISS indexes in production AI pipelines and RAG workflows.

- Handle billion-scale vector datasets efficiently.

- Apply FAISS in enterprise-grade AI systems for NLP and search applications.

- Understanding Embeddings and Similarity Metrics

- Why FAISS for Large-Scale Search?

- FAISS Architecture Overview

- Installation (CPU and GPU Versions)

- Python API Basics

- Building a Simple Flat Index

- Exact Search vs Approximate Nearest Neighbor (ANN)

- Cosine Similarity vs Euclidean Distance

- Indexing and Query Basics

- Flat (Brute Force Search)

- Inverted File (IVF) Indexes

- Product Quantization (PQ)

- Hierarchical Navigable Small World (HNSW) Graphs

- Using FAISS with GPU for Speedup

- Hybrid CPU-GPU Workflows

- Handling Billion-Scale Datasets

- OpenAI Embeddings + FAISS

- LangChain VectorStore Integration

- Hugging Face Sentence Transformers

- Creating a Document Embedding Pipeline

- Querying for Semantic Similarity

- Ranking and Relevance Scoring

- Retrieval-Augmented Generation with FAISS

- Using FAISS as a Knowledge Base for LLMs

- Combining FAISS with LangChain Agents

- Reducing Memory Footprint

- Index Sharding and Distributed FAISS

- Balancing Accuracy vs Speed in ANN Search

- News Article Semantic Search Engine

- Product Recommendation System with FAISS

- Chatbot Knowledge Retrieval Pipeline

- Deploying FAISS in Production (APIs and Microservices)

- Monitoring and Scaling Strategies

- Security and Compliance Considerations

Upon completion, learners will receive a Certificate of Completion from Uplatz, demonstrating expertise in FAISS-based vector similarity search and retrieval. This certification validates your ability to implement embedding-based search engines, optimize retrieval pipelines, and integrate FAISS into AI-powered systems. It is highly valuable for professionals working on NLP, search, recommendation engines, or LLMOps (Retrieval-Augmented Generation pipelines).

- AI Engineer / LLM Developer

- Machine Learning Engineer (Search & NLP)

- Data Scientist (Information Retrieval Focus)

- LLMOps Engineer (RAG Pipelines)

- Recommendation Systems Engineer

- Build high-performance embedding search pipelines.

- Integrate retrieval capabilities into LLM applications.

- Handle billion-scale vector datasets efficiently for production.

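- What is FAISS, and what problem does it solve?

FAISS (Facebook AI Similarity Search) is an open-source library from Meta for efficient similarity search and clustering of dense, high-dimensional vectors. It finds the vectors closest to a query among millions or billions of candidates, which underpins semantic search, recommendation systems, and AI retrieval.

- What are embeddings, and why are they important for FAISS?

Embeddings are vector representations of data (text, images) that capture semantic meaning: similar items map to nearby vectors. This lets FAISS answer "find similar items" as a nearest-neighbor search over those vectors.

- Explain Flat vs IVF Index in FAISS.

A Flat index stores the raw vectors and compares each query with every one of them. The search is exact, but its cost grows linearly with the dataset. An IVF (Inverted File) index is trained with k-means to partition the vectors into nlist clusters. At query time it scans only the nprobe clusters closest to the query, which is much faster but approximate.

- What is Product Quantization (PQ) in FAISS?

PQ compresses each vector by splitting it into m sub-vectors and replacing each sub-vector with the id of its nearest centroid in a small learned codebook. With m = 8 sub-quantizers of 8 bits each, a vector takes 8 bytes instead of 4 bytes per dimension as float32. Distances are estimated directly from the codes, which enables memory-efficient large-scale ANN search.

- How does FAISS integrate with LangChain or LLM workflows?

FAISS can be used as a LangChain VectorStore that stores document embeddings and retrieves the most relevant chunks as context for LLM-powered RAG pipelines.

- What is ANN (Approximate Nearest Neighbor) search?

ANN search avoids comparing the query with every stored vector, for example by probing only a few IVF clusters or by walking an HNSW graph. It trades a small loss in recall for large speedups on big datasets.

- How does FAISS leverage GPUs?

FAISS ships CUDA implementations of several index types, including Flat, IVF-Flat, and IVF-PQ. These run distance computation and top-k selection in parallel on the GPU, greatly speeding up search on large datasets.

- What are common similarity metrics supported in FAISS?

FAISS natively supports L2 (Euclidean) distance and inner product. Cosine similarity is obtained by L2-normalizing the vectors and using an inner-product index, because for unit-length vectors the inner product equals the cosine.

- What is HNSW indexing in FAISS?

HNSW (Hierarchical Navigable Small World) is a graph-based index that provides fast ANN search with high recall. The plain variant (IndexHNSWFlat) needs no training. Its speed/accuracy trade-off is tuned mainly through M (graph degree), efConstruction, and efSearch.

- How would you deploy FAISS in a production pipeline?

Serve the index behind an API or microservice and feed it from an embedding pipeline (e.g., OpenAI embeddings). Then monitor latency and recall, and scale the deployment as the data grows, for example by sharding or replicating the index.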